Introducing the Turbo LLM Inference Engine

We are thrilled to introduce Nolano’s Turbo LLM Engine, built to cut inference latency for Large Language Models (LLMs).

Large Language Models have become an integral component of modern software systems. In the world of language models, speed and efficiency are critical. For applications ranging from chatbots like ChatGPT to code/content generation tools like Jasper.ai and GitHub Copilot, the time it takes to generate a response can make all the difference.

At Nolano, we built the Turbo LLM Engine to address exactly this: faster inference for LLMs.

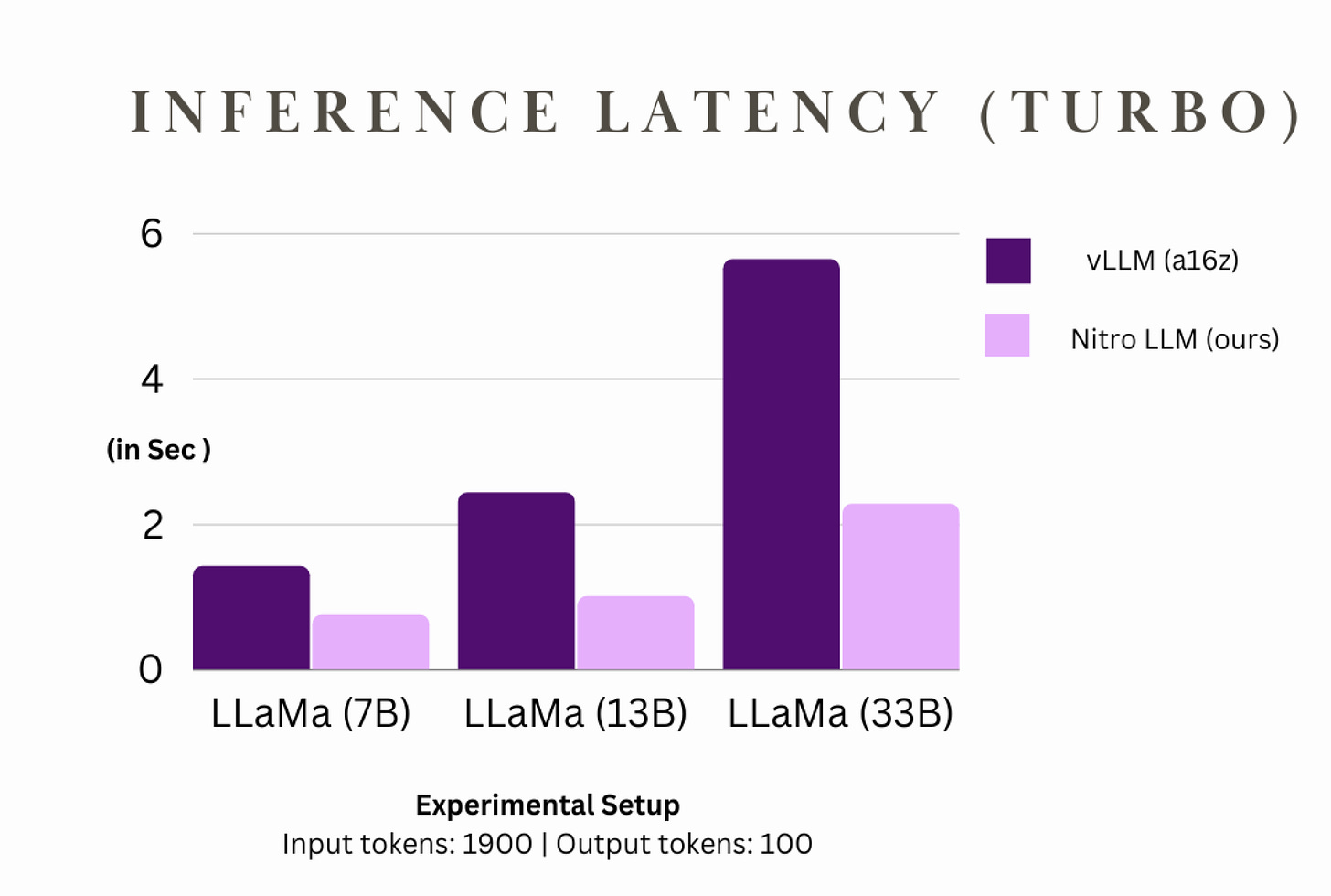

Before we dive into the performance benchmarks, it's essential to understand the experimental details:

Experimental Details

Latency Exclusions: The reported latencies exclude network delays, tokenization, and sampling overhead, so they reflect only the core inference computation.

Test Consistency: Each reported latency is the mean of five consecutive runs, measured after a warm-up phase has stabilized performance. This reduces variance and makes the results more reliable.

Hardware & Model Specifications: All benchmarks ran on a single NVIDIA A100 GPU. The target models were the LLaMA 2 series; because LLaMA 2 34B was unavailable, the benchmarks for that size used the LLaMA 1 33B model instead.
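The measurement procedure described above (warm-up first, then the mean of five timed runs) can be sketched roughly as follows. This is a minimal illustration, not Nolano's actual benchmarking harness: the function name `mean_latency`, the `run_inference` callable, and the default of three warm-up runs are all assumptions made for the example, since the post does not state how many warm-up runs were used.

```python
import statistics
import time
from typing import Callable, List


def mean_latency(run_inference: Callable[[], object],
                 warmup_runs: int = 3,   # assumed value; not given in the post
                 timed_runs: int = 5) -> float:
    """Return the mean wall-clock latency (seconds) over `timed_runs` calls.

    `run_inference` is a hypothetical zero-argument callable wrapping only the
    core forward/generation step, so tokenization, sampling, and network time
    stay outside the timed region. On a GPU, the callable should block until
    the device has finished (for example by calling torch.cuda.synchronize()
    in PyTorch); otherwise only the kernel launch time is measured.
    """
    # Warm-up phase: results are discarded so one-time costs
    # (memory allocation, kernel compilation, cache fills) do not skew timings.
    for _ in range(warmup_runs):
        run_inference()

    latencies: List[float] = []
    for _ in range(timed_runs):
        start = time.perf_counter()
        run_inference()
        latencies.append(time.perf_counter() - start)

    return statistics.mean(latencies)


if __name__ == "__main__":
    # Stand-in workload for demonstration only; replace with a real model call.
    dummy = lambda: sum(i * i for i in range(100_000))
    print(f"mean latency: {mean_latency(dummy):.6f} s")
```

Keeping the timed region limited to a single callable makes it easy to apply the same harness to both engines under identical conditions.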

In this post, we compare the performance of the Turbo LLM Engine with that of vLLM, a library for LLM inference and serving. Our comparison is based on:

Output Quality

Latency across various input-output token configurations

Improvement scale with model size

Benchmarking

1. Output Quality Benchmark

Before we talk about speed, let's ensure we're not compromising on quality. Based on Hugging Face's metrics for the LLM Benchmark (which includes tests such as the AI2 Reasoning Challenge (ARC), MMLU, HellaSwag, and TruthfulQA), both inference engines show almost identical scores, indicating that output quality remains consistent.

2. Latency Comparison

Now, to the exciting part. How fast can each engine deliver quality responses? We've benchmarked latencies across three different input-output token configurations. Note: The latencies mentioned here exclude network latency, tokenization, and sampling overhead. Here's what we found:

a. For Input tokens: 1, Output tokens: 512

b. For Input tokens: 1900, Output tokens: 100

c. For Input tokens: 512, Output tokens: 32

The Turbo LLM Engine consistently outperforms vLLM, with an improvement of up to 3.3x in inference speed. The gains are largest for the larger models.
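For readers reproducing the comparison, a speedup figure such as "3.3x" is commonly computed as the ratio of the baseline latency to the candidate latency. The sketch below assumes that definition, which the post does not spell out, and the function name `speedup` is hypothetical. The numbers in the usage lines are arbitrary placeholders, not measured results.

```python
def speedup(baseline_latency_s: float, candidate_latency_s: float) -> float:
    """Return how many times faster the candidate is than the baseline.

    Assumes speedup = baseline latency / candidate latency, with both
    latencies measured in the same unit under the same conditions.
    """
    if baseline_latency_s <= 0 or candidate_latency_s <= 0:
        raise ValueError("latencies must be positive")
    return baseline_latency_s / candidate_latency_s


if __name__ == "__main__":
    # Placeholder values for illustration only (not benchmark data).
    print(f"{speedup(2.0, 1.0):.1f}x")
```

A value above 1 means the candidate engine is faster; a value below 1 means it is slower than the baseline.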

Try out the Turbo LLM Engine today and supercharge your language models!